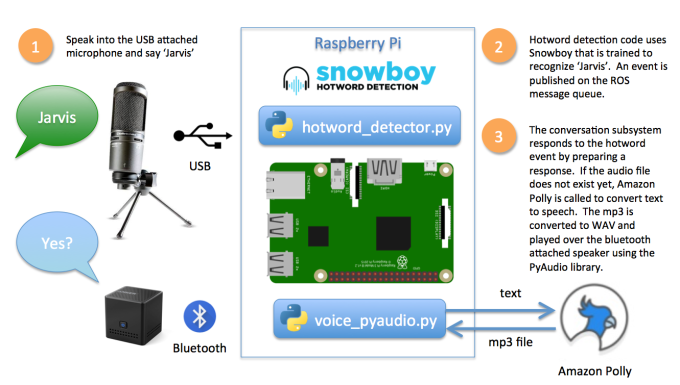

In my previous post, I described the steps needed to build a conversational interface. That post covered starting a conversation when a hotword is detected and acknowledging that the conversation has started. This post covers the rest of the conversation’s implementation.

In order for the robot to have a conversation, it has to do the following:

- Listen and detect when its name is spoken. This is called hotword detection. I’ve elected to name my robot Jarvis after the computer assistant used by Tony Stark in the Iron Man series.

- Respond in a way that indicates it has recognized its name and is ready for me to say something.

- Record what I say as audio and convert it to text. This is called Speech to Text (STT).

- Understand the intent of what I said. This is called Natural Language Processing (NLP).

- Take some action based on the intent, including a verbal response.

This post covers steps 3 through 5. Steps 1 and 2 were covered in the previous post.

Managing the Conversation

The ConversationManager oversees the back-and-forth as follows:

A conversation loop starts when the hotword is detected. The conversation records the user’s speech and converts it to text using pocketsphinx, the Python binding to the CMU Sphinx speech recognition library. The text is then sent through an NLP step implemented with the api.ai service, using a custom set of intents, entities and contexts. The CommandProcessor interprets the NLP response as a command and routes it to one of several specialized command handlers. Each command produces a text response, which is spoken by the text-to-speech (TTS) capability described previously and implemented by the PollySpeechSynthesizer. Let’s go over each step in turn.
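The loop described above can be sketched in plain Python. This is a minimal sketch, not the code in the repository. It assumes each stage (pocketsphinx STT, the api.ai NLP step, the CommandProcessor and the Polly TTS) can be wrapped as a simple callable. The `NlpResult` type and the `end_conversation` action name are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class NlpResult:
    """Hypothetical, simplified result of the NLP step."""
    action: str                                   # e.g. "get_status" (hypothetical name)
    parameters: Dict[str, str] = field(default_factory=dict)
    speech: str = ""                              # the agent's own textual reply, if any


class ConversationManager:
    """Sketch of the listen -> understand -> act -> speak loop."""

    # Hypothetical action name returned by the "Control Conversation" intent.
    END_ACTION = "end_conversation"

    def __init__(
        self,
        listen: Callable[[], Optional[str]],     # STT: recognized text, or None if nothing was heard
        understand: Callable[[str], NlpResult],  # NLP: text -> intent/action
        process: Callable[[NlpResult], str],     # CommandProcessor: command -> reply text
        speak: Callable[[str], None],            # TTS: say the reply out loud
        max_turns: int = 10,
    ) -> None:
        self.listen = listen
        self.understand = understand
        self.process = process
        self.speak = speak
        self.max_turns = max_turns

    def converse(self) -> int:
        """Run one conversation (started after the hotword); return the number of turns."""
        turns = 0
        while turns < self.max_turns:
            turns += 1
            text = self.listen()
            if not text:
                # Nothing recognized: ask the user to try again.
                self.speak("Sorry, I didn't catch that.")
                continue
            result = self.understand(text)
            reply = self.process(result)
            if reply:
                self.speak(reply)
            if result.action == self.END_ACTION:
                break
        return turns
```

Each stage is injected, so the loop can be exercised with scripted fakes before any of the ROS services are running. For example, `listen` could simply return canned phrases. The `max_turns` cap is an assumption. It keeps a conversation full of misheard input from running forever.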

In Search of Real Time Speech Recognition

I spent a great deal of time assessing options for very responsive speech recognition on the Raspberry Pi. I initially eliminated CMU Sphinx because the default installation would take nearly a minute to recognize one or two words. I looked at cloud-based solutions, but I wanted something that would be very responsive and available without an internet connection. Then I came across this demonstration by Alan McDonley:

I found the source code for this demonstration here and realized that its performance came from a customized, reduced vocabulary. I’ve adapted this example to work within the ROS project structure; my version is located here.

The reduced vocabulary is the secret to getting CMU Sphinx to work this well. Here’s how I did it:

- Create a text file containing example phrases you think the user will say. This is called the corpus file. I’ve created one for the robot in the corpus.txt file.

- Go to the Sphinx Knowledge Base Tool site. Upload the corpus file and click “Compile Knowledge Base”. This creates several files, each named with a sequence number:

    - .sent – The corpus with additional formatting. This is inmoov-rpi.sent.

    - .vocab – The vocabulary: the list of all words found in the corpus. This is inmoov-rpi.vocab.

    - .dic – The dictionary of pronunciations for the words in the vocabulary. This is inmoov-rpi.dic.

    - .log_pronounce – A log of how the pronunciation of each word was determined. This is inmoov-rpi.log_pronounce.

    - .lm – The language model, which holds the statistical (n-gram) probabilities the engine uses to recognize sequences of words from the vocabulary. This is inmoov-rpi.lm.

- Download the generated files.

- Update the code in pocketsphinxlistener.py to reflect the names of the downloaded files. I plan to eventually move these values to the ROS launch file to centralize the configuration.
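The last two steps can be sketched as follows. This is a hedged sketch that assumes the older pocketsphinx-python (SWIG) bindings. In those bindings, a decoder is configured through `Decoder.default_config()` and `set_string()`; newer pocketsphinx releases configure the decoder differently.

Some parts are my own illustrations rather than part of pocketsphinxlistener.py:

* the helper names `missing_pronunciations`, `sphinx_settings` and `make_decoder`;
* the directory arguments;
* the idea of checking the corpus against the `.dic` file first.

```python
import os
import re
from typing import Dict, List


def missing_pronunciations(corpus_text: str, dic_text: str) -> List[str]:
    """Return corpus words (uppercased, sorted) that have no entry in the .dic file.

    A .dic line looks like "WORD  PH O N E S"; alternate pronunciations are
    written as "WORD(2) ...", so the "(n)" suffix is stripped before comparing.
    """
    known = set()
    for line in dic_text.splitlines():
        parts = line.split()
        if parts:
            known.add(re.sub(r"\(\d+\)$", "", parts[0]).upper())
    words = {w.upper() for w in re.findall(r"[A-Za-z']+", corpus_text)}
    return sorted(words - known)


def sphinx_settings(acoustic_model_dir: str, kb_dir: str,
                    base_name: str = "inmoov-rpi") -> Dict[str, str]:
    """Map pocketsphinx options to the downloaded knowledge base files.

    -hmm  : the stock acoustic model (not produced by the knowledge base tool)
    -lm   : the reduced language model, <base_name>.lm
    -dict : the matching pronunciation dictionary, <base_name>.dic
    """
    return {
        "-hmm": acoustic_model_dir,
        "-lm": os.path.join(kb_dir, base_name + ".lm"),
        "-dict": os.path.join(kb_dir, base_name + ".dic"),
    }


def make_decoder(settings: Dict[str, str]):
    """Build a decoder (assumes the pocketsphinx-python SWIG bindings are installed)."""
    # Imported here so the helpers above work without pocketsphinx installed.
    from pocketsphinx.pocketsphinx import Decoder

    config = Decoder.default_config()
    for option, value in settings.items():
        config.set_string(option, value)
    return Decoder(config)
```

Keeping the paths as arguments should make the planned move to the ROS launch file easier. The values could then be read with `rospy.get_param` and passed straight in.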

I encapsulated this capability within a ROS speech to text service node for use by other nodes within the robot.

Your Wish is My Command

Let’s say you want to ask the robot if there is a connection to the internet. You could say:

- Check the internet

- How’s the wi-fi?

- Is the network working?

or any number of other variations. In all of these cases, the intent is for the robot to respond verbally with the status of the internet connection. The tricky part is determining the user’s intent (get status) and the context, or inferred component (network). Luckily, several NLP services can do this, including api.ai.

The api.ai service is very easy to configure. There are three essential concepts you need to get started:

- Agent – The entry point into your custom NLP service. I’ve created a private agent called InMoov. Each agent has a client access token that identifies which agent you want to use. You’ll need to customize nlphandler.py to use your agent’s key.

- Entity – The known nouns of the conversation. You can define synonyms for each entity so that the agent can interpret what is being referred to. For example, the network component entity has the synonyms wireless, internet, connection, web, etc.

- Intent – Each intent defines the outcome of the NLP processing. An intent is defined by:

    - contexts: information that is shared across a number of interactions. You can use this to ensure the subject of the conversation is maintained. For example, you could say “Play Metallica”, then “Turn it off”, then “Turn on the porch light”, and then “Turn it off” again. Even though both “Turn it off” commands are identical, the context (the music player or the porch light) lets the agent determine your meaning.

    - sample phrases: phrases that a user might say for this intent. Machine learning is used to recognize other variations, so you don’t need an exact match. You can link selected words within the phrases to defined entities.

    - action: the action that should be taken based on the intent.

    - response: a textual response, such as a message.
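api.ai tracks contexts on its side, so the robot doesn’t have to. Still, the idea behind the “Turn it off” example can be shown with a toy, local stand-in: remember the most recently mentioned device and use it to resolve “it”. The `ContextTracker` class and its keyword table are hypothetical. They are also far simpler than api.ai’s contexts, which have a limited lifespan and are matched by the agent rather than by keywords.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical keyword table: context name -> words that put that context in focus.
CONTEXT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "music player": ("play", "music", "song"),
    "porch light": ("porch light", "porch"),
}


class ContextTracker:
    """Toy context tracker: the last device mentioned is what "it" refers to."""

    def __init__(self, keywords: Dict[str, Tuple[str, ...]]) -> None:
        self.keywords = keywords
        self.current: Optional[str] = None

    def resolve(self, utterance: str) -> Optional[str]:
        # Normalize to lowercase words, padded with spaces for whole-word matching.
        padded = " " + " ".join(re.findall(r"[a-z']+", utterance.lower())) + " "
        for context, words in self.keywords.items():
            if any(f" {word} " in padded for word in words):
                self.current = context  # a new subject enters the conversation
                return context
        if " it " in padded:
            return self.current  # "it" refers to the subject already in focus
        return None
```

Feeding it the four utterances from the example resolves both “Turn it off” commands correctly. The first resolves to the music player and the second to the porch light.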

I’m using three intents:

- Fallback Intent – The safety-net intent used when no other intent matches. A typical response here might be “I didn’t understand. Can you say that again?”

- Control Conversation – Lets me end the conversation with any of a number of different phrases.

- Get Status – Various ways to ask about the status of the robot or its components.
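To make the agent, entity and intent concepts concrete, here is a deliberately naive, keyword-based stand-in for what the agent does with the three intents above. api.ai uses machine learning over the sample phrases rather than keyword lookup. Treat this only as an illustration of the data involved. The trigger words and the extra `network`, `wi fi` and `wifi` synonyms are my assumptions.

```python
import re
from typing import Dict, Tuple

# Hypothetical entity definition: entity -> canonical value -> synonyms.
ENTITIES: Dict[str, Dict[str, Tuple[str, ...]]] = {
    "component": {
        "network": ("network", "wireless", "internet", "connection", "web", "wi fi", "wifi"),
    },
}

# Hypothetical trigger words per intent; api.ai learns these from sample phrases instead.
INTENT_TRIGGERS: Dict[str, Tuple[str, ...]] = {
    "Control Conversation": ("goodbye", "bye", "stop listening", "that's all"),
    "Get Status": ("check", "how's", "status", "working"),
}


def _pad(text: str) -> str:
    """Lowercase, keep only words, and pad with spaces for whole-word matching."""
    return " " + " ".join(re.findall(r"[a-z']+", text.lower())) + " "


def match_intent(text: str) -> Tuple[str, Dict[str, str]]:
    """Return (intent name, entity parameters) for a recognized phrase."""
    padded = _pad(text)
    params: Dict[str, str] = {}
    # Entities are extracted independently of which intent matches.
    for entity, values in ENTITIES.items():
        for canonical, synonyms in values.items():
            if any(f" {s} " in padded for s in synonyms):
                params[entity] = canonical
    for intent, triggers in INTENT_TRIGGERS.items():
        if any(f" {t} " in padded for t in triggers):
            return intent, params
    # Nothing matched: the safety net.
    return "Fallback Intent", params
```

Extracting entities separately from intents mirrors how a single phrase such as “How’s the wi-fi?” yields both the intent (Get Status) and the component (network).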

Calling the api.ai agent returns a JSON response. The code navigates through that response to create a ROS-compatible command and any error message. The CommandProcessor interrogates the NLP response and dispatches the request to the appropriate handler based on the returned action and context. Each handler performs its specific action and returns text to be spoken in response. Here is the network handler as an example. The ConversationManager directs the TTS service to speak the returned text and then waits for the next command.
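The parsing and dispatch steps can be sketched like this. This is a hedged sketch that assumes the response shape of api.ai’s v1 `/query` endpoint: `status.code`, `status.errorDetails`, `result.action`, `result.parameters`, `result.contexts` and `result.fulfillment.speech`.

The following pieces are hypothetical and are not taken from nlphandler.py or the actual network handler:

* the `get_status` action and the `component` parameter;
* routing on `(action, component)` instead of the context name;
* the TCP-connect connectivity probe.

```python
import json
import socket
from typing import Any, Callable, Dict, Optional, Tuple

Command = Dict[str, Any]


def parse_nlp_response(raw: str) -> Tuple[Optional[Command], Optional[str]]:
    """Turn a v1-style api.ai JSON reply into (command, error_message)."""
    data = json.loads(raw)
    status = data.get("status", {})
    if status.get("code") != 200:
        return None, status.get("errorDetails", "The NLP request failed.")
    result = data.get("result", {})
    command: Command = {
        "action": result.get("action", ""),
        "parameters": result.get("parameters", {}),
        "contexts": [ctx.get("name") for ctx in result.get("contexts", [])],
        "speech": result.get("fulfillment", {}).get("speech", ""),
    }
    return command, None


def internet_reachable(host: str = "8.8.8.8", port: int = 53, timeout: float = 3.0) -> bool:
    """Hypothetical probe: can a TCP connection be opened to a public DNS server?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


class NetworkHandler:
    """Answers status questions about the network component."""

    def __init__(self, probe: Callable[[], bool] = internet_reachable) -> None:
        self.probe = probe

    def handle(self, command: Command) -> str:
        if self.probe():
            return "The internet connection is working."
        return "I can't reach the internet right now."


class CommandProcessor:
    """Routes each command to a handler registered for its (action, component)."""

    def __init__(self) -> None:
        self.handlers: Dict[Tuple[str, Optional[str]], Any] = {}

    def register(self, action: str, component: Optional[str], handler: Any) -> None:
        self.handlers[(action, component)] = handler

    def dispatch(self, command: Command) -> str:
        component = command.get("parameters", {}).get("component")
        handler = self.handlers.get((command.get("action", ""), component))
        if handler is None:
            # No specialized handler: fall back to the agent's own reply, if any.
            return command.get("speech") or "I'm not sure how to do that yet."
        return handler.handle(command)
```

Injecting the probe keeps the handler testable without a network. Falling back to the agent’s own `speech` text means intents without a specialized handler can still answer.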

Demonstration

Here is a demo of starting up ROS after logging in to the Raspberry Pi remotely, followed by a short conversation.