This is a milestone post in my series describing my experience building a 3-D printed InMoov robot using a Raspberry Pi 3 and ROS. The source code is now available here. In my last post, I described the development of a user interface to adjust the position of the servos using a touch screen. Since then, I have moved the hardware from a breadboard to a “permanent breadboard” implementation, making it more compact and tidy. Here is a video that highlights that progress.

Taking a Leap

The next step in this project is to integrate a Leap Motion virtual reality controller. The Leap Motion controller uses infrared cameras to track the position of your hands and fingers in the space in front of your monitor. It is intended for controlling what happens on your computer, including virtual reality applications. In this case, we are going to use it to have the robot hand mimic my hand.

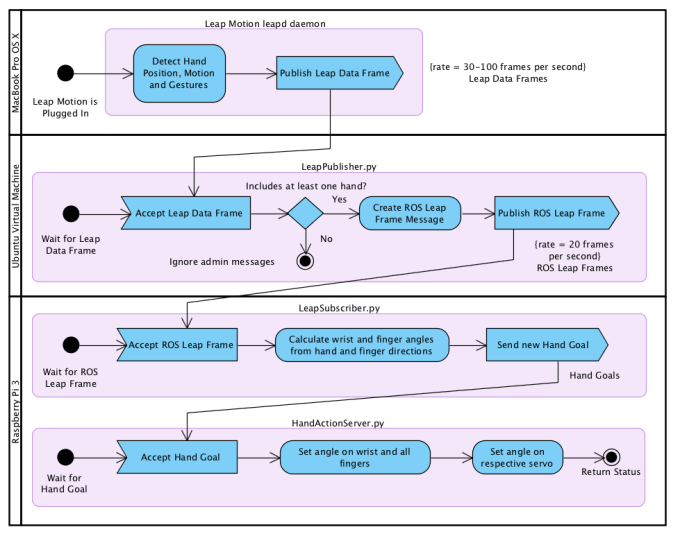

- The Leap Motion is plugged into my MacBook Pro via a USB cable. I installed the V2 Desktop SDK so that I could get the hand and finger position data through an API. The Leap Motion tracks your hands and generates up to 100 frames per second of JSON data, which is available on a WebSocket API hosted by a daemon process. The documentation is above average but still has a few inconsistencies.

- As I have mentioned previously, I am running an Ubuntu virtual machine on my MacBook Pro and have installed ROS on that virtual machine. I originally intended to install ROS natively on the Mac, but I was unable to get that working. As a result, a ROS Publisher runs on the Ubuntu VM and is responsible for bridging the Leap Motion data into the ROS messaging infrastructure. This component converts the JSON-based Leap Motion data into a nearly complete equivalent ROS message. It cannot run on the Raspberry Pi because the Pi cannot keep up with the bandwidth generated by the Leap Motion. While converting the JSON messages into ROS messages, the publisher throttles the publishing rate down to 20 frames per second.

- The Raspberry Pi subscribes to the ROS-compatible Leap Motion messages and converts the position vectors from the Leap Motion into angle goals for the hand servos. This is done with a ROS Subscriber (to receive the Leap messages and convert them to a goal) and a ROS Action Server (to update the hand and finger positions).
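To make the bridging step a little more concrete, here is a minimal Python sketch of turning one Leap Motion WebSocket message into plain hand and finger records before they are repackaged as ROS messages. It assumes the V2 JSON layout exposes `hands` (with `id`, `direction`, `palmNormal`) and `pointables` (with `handId`, `type`, `direction`, `extended`); check those names against your SDK version. `Hand`, `Finger` and `parse_frame` are hypothetical names, not taken from the published code.

```python
import json
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vector = Tuple[float, float, float]


@dataclass
class Finger:
    finger_type: int   # assumed Leap convention: 0 = thumb ... 4 = pinky
    direction: Vector  # unit vector pointing along the finger
    extended: bool


@dataclass
class Hand:
    hand_id: int
    direction: Vector    # unit vector from the palm toward the fingers
    palm_normal: Vector  # unit vector pointing out of the palm
    fingers: List[Finger]


def parse_frame(raw: str) -> Optional[List[Hand]]:
    """Parse one Leap Motion WebSocket message into Hand objects.

    Returns None for messages that are not tracking frames, such as the
    version message the daemon sends when the socket opens.
    Field names are assumptions based on the Leap V2 JSON protocol.
    """
    msg = json.loads(raw)
    if "hands" not in msg:
        return None
    hands: Dict[int, Hand] = {}
    for h in msg["hands"]:
        hands[h["id"]] = Hand(h["id"], tuple(h["direction"]), tuple(h["palmNormal"]), [])
    for p in msg.get("pointables", []):
        hand = hands.get(p.get("handId"))
        if hand is not None:  # skip pointables that belong to no tracked hand
            hand.fingers.append(Finger(p["type"], tuple(p["direction"]), bool(p["extended"])))
    return list(hands.values())
```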
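The publisher's rate limiting can be sketched as a small time-based throttle: a frame is forwarded only if at least 1/20 s has passed since the last published frame, so a stream of roughly 100 frames per second is reduced to at most 20. `FrameThrottle` is a hypothetical helper, not the class used in the repository; in a rospy node the same check would sit in the WebSocket message callback just before publishing.

```python
import time
from typing import Callable, Optional


class FrameThrottle:
    """Let through at most `rate_hz` frames per second and drop the rest.

    A sketch of down-sampling the Leap stream before publishing. The clock
    is injectable, and should_publish() also accepts an explicit timestamp,
    which keeps the class easy to test.
    """

    def __init__(self, rate_hz: float = 20.0,
                 now: Callable[[], float] = time.monotonic) -> None:
        self.min_interval = 1.0 / rate_hz
        self.now = now
        self.last_sent: Optional[float] = None

    def should_publish(self, t: Optional[float] = None) -> bool:
        t = self.now() if t is None else t
        # Publish the very first frame, then only once the interval has elapsed.
        if self.last_sent is None or t - self.last_sent >= self.min_interval:
            self.last_sent = t
            return True
        return False
```

Dropping frames, rather than queueing them, keeps latency low: the robot always works from the most recent hand pose instead of catching up on stale ones.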
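On the Raspberry Pi side, the last step of turning a finger measurement into an angle goal can be as simple as a clamped linear map onto each servo's calibrated range. This sketch assumes the input is a finger bend angle in degrees and uses hypothetical per-finger "open" and "closed" servo angles. The real limits depend on how each tendon is rigged, and `ServoRange` and `bend_to_servo_goal` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass
class ServoRange:
    """Hypothetical per-finger calibration: servo angles (degrees) that
    correspond to a fully open and a fully closed finger."""
    open_deg: float
    closed_deg: float


def bend_to_servo_goal(bend_deg: float, servo: ServoRange,
                       max_bend_deg: float = 90.0) -> float:
    """Linearly map a finger bend angle onto the servo's calibrated range.

    The bend is clamped to [0, max_bend_deg] so noisy tracking data can
    never drive a servo past its calibrated limits. Works for servos
    mounted in either direction (closed_deg may be below open_deg).
    """
    fraction = min(max(bend_deg / max_bend_deg, 0.0), 1.0)
    return servo.open_deg + fraction * (servo.closed_deg - servo.open_deg)


# Example: a hypothetical index-finger servo calibrated between 20 and 160 degrees.
index = ServoRange(open_deg=20.0, closed_deg=160.0)
half_curled = bend_to_servo_goal(45.0, index)
```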

The diagram below depicts these activities:

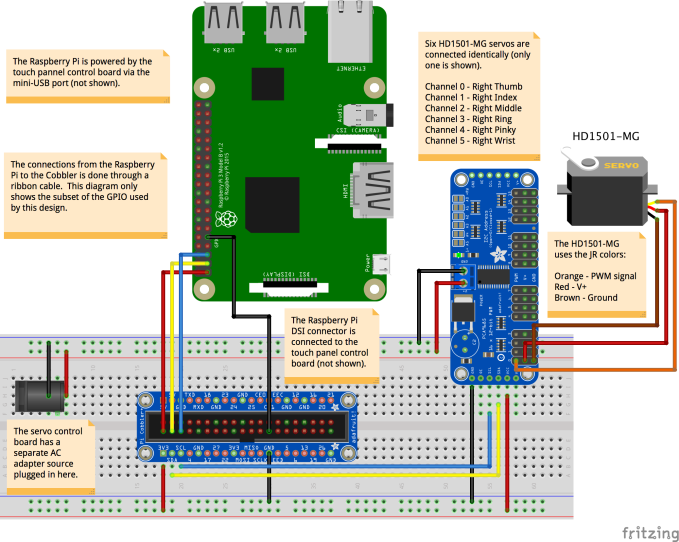

The published code depends very heavily on the physical implementation of the Raspberry Pi, the servo controller and the servo channel assignments. This diagram shows how I have assembled most of the hardware:

Here is a demonstration of how well the integration works. There is a bit of a delay between my hand moving and the robot reacting, and it appears that two fingers need some adjustment.

I am now at a point where I have to decide on my next steps in this project. There appears to be a minor bug in the wrist rotation, so that has been commented out for now.

Hi Mike,

I would like to know if I got this right.

In the handleMessage function, where the math is done in the code, only the following variables are used to calculate the angles:

hand.direction, hand.palmNormal, pointable.direction and pointable.extended.

Right?

Thank you,

Jayme Boarin

Yes, that is how I did it with a combination of vector calculations.
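For readers wondering what such a vector calculation might look like, here is one possible approach in Python. It is a sketch, not the code from handleMessage. It takes the angle between hand.direction and pointable.direction as the finger's bend. It then uses the sign of the finger direction's projection onto hand.palmNormal to distinguish curling toward the palm from bending back. As a hypothetical simplification, it reports fingers flagged by pointable.extended as straight. All vectors are assumed to be unit length.

```python
import math
from typing import Sequence


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def finger_bend_deg(hand_direction: Sequence[float],
                    palm_normal: Sequence[float],
                    finger_direction: Sequence[float],
                    extended: bool) -> float:
    """Signed bend of one finger relative to the hand, in degrees.

    0 means the finger is in line with the hand, positive means curled
    toward the palm, negative means bent back. Assumes unit vectors.
    """
    if extended:
        return 0.0  # hypothetical simplification: treat extended fingers as straight
    # Clamp to [-1, 1] so rounding noise cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, _dot(hand_direction, finger_direction)))
    angle = math.degrees(math.acos(cos_angle))
    # Fingers curling toward the palm point along the palm normal.
    return angle if _dot(finger_direction, palm_normal) >= 0 else -angle
```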

Hello Mike,

I’m just wondering why there aren’t any HandAction.msg, HandGoal.msg or HandResult.msg files in the msgs folder?

Thanks,

Diane

Hi Diane, That’s a great question. I haven’t worked on this in a while, but I’m happy that someone has taken an interest. I see that several files import those messages, but the CMakeLists.txt (line 53) is not building them and they are missing from msg. I have no idea how that could have happened, but it looks like my files are inconsistent. I’ll have a look to make sure the repo is up to date. Thanks for pointing that out.

It took me a while to remember, but there is a logical explanation. See the ROS action library at http://wiki.ros.org/actionlib. Those messages are automatically generated during the build from the Hand action definition in the inmoov_ros/inmoov/action/Hand.action file. That file has the definitions for the goal, result and feedback.
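To illustrate what actionlib does with that file, here is a small Python sketch. The Hand.action contents below are hypothetical, because the real fields are not shown here. An .action file is simply three message definitions (goal, result, feedback) separated by `---` lines. In a catkin package, declaring the file with `add_action_files()` and calling `generate_messages()` with `actionlib_msgs` as a dependency makes the build generate seven message types. These typically end up in the build's devel space rather than in the package's own msg folder, which is why they do not appear in the repository. `split_action` and `generated_messages` are illustrative helpers, not part of ROS.

```python
from typing import Dict, List

# Hypothetical Hand.action contents; the real file in inmoov_ros may differ.
HAND_ACTION = """\
# goal: target angle per finger servo (hypothetical fields)
float32[] angles
---
# result
bool success
---
# feedback
float32[] current_angles
"""


def split_action(spec: str) -> Dict[str, List[str]]:
    """Split an .action spec into goal/result/feedback field lines,
    mirroring the three '---'-separated sections actionlib expects."""
    parts: List[List[str]] = [[]]
    for line in spec.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if line == "---":
            parts.append([])
        elif line:
            parts[-1].append(line)
    if len(parts) != 3:
        raise ValueError("an .action file needs exactly two '---' separators")
    return dict(zip(["goal", "result", "feedback"], parts))


def generated_messages(action_name: str) -> List[str]:
    """Message files actionlib generates for <action_name>.action."""
    suffixes = ["Action", "ActionGoal", "ActionResult", "ActionFeedback",
                "Goal", "Result", "Feedback"]
    return [action_name + suffix + ".msg" for suffix in suffixes]
```

For Hand.action, this lists HandAction.msg, HandGoal.msg and HandResult.msg among the generated files, which matches the names being imported.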

Thank you so much, Mike! I am looking forward to implementing this project, and I’d love to have your guidance.

Thanks,

Diane